SolrNet is a Solr client for .NET.

I finally managed to close all pending issues and released SolrNet 0.3.0 beta1.

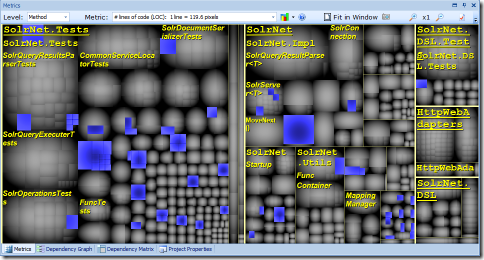

There are quite a few changes and new features. Comparing the last release (already 6 months ago!) to this one using NDepend:

- Number of IL instructions: 16161 to 21394 (+32.4%)
- Number of types: 210 to 287 (+36.7%)

On to the...

Breaking changes

Even though there were a lot of changes, it's not very likely that any single developer will see more than one or two breaking changes (if any) since nobody uses all features. Here are the details:

Field collapsing changes

What: Field collapsing parameters and results have completely changed.

Who this affects: Everyone using Solr's field collapsing feature.

Change required in the application: Until this is properly documented, see the new CollapseParameters and CollapseResults types.

Why: Field collapsing is still an unreleased Solr feature, and Solr changed it completely.

Changes in ISolrConnection

What: The ISolrConnection interface no longer has the ServerURL and Version properties.

Who this affects: Everyone implementing a decorator for ISolrConnection (e.g. LoggingConnection).

Change required in the application: Remove these properties from your decorator.

Why: They served no purpose in the interface; they belong to the implementation.

Indexed results (Highlighting, MoreLikeThis)

What: Indexed results are no longer keyed by the document entity. They're now keyed by the document's unique key, represented as a string.

Who this affects: Almost everyone using the Highlighting or MoreLikeThis features.

Change required in the application: instead of:

MyDocument doc = ...
ISolrQueryResults<MyDocument> results = ...
results.Highlighting[doc]...

do:

MyDocument doc = ...
ISolrQueryResults<MyDocument> results = ...
results.Highlighting[doc.Id.ToString()]...

Why: This was needed to implement loose mapping (see below). The old approach was also a potential performance hog and didn't add much value anyway.

Highlight results

What: The highlighting results type changed from IDictionary<string, string> to IDictionary<string, ICollection<string>>.

Who this affects: Everyone using the Highlighting feature.

Change required in the application: If you were relying on getting only a single snippet, just get the first element in the collection.

Why: With the old type, multiple snippets per field couldn't be returned.
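Some code still expects one snippet per field. For that code, a small adapter can keep the old behavior by taking the first element of each collection. This is a minimal sketch. It assumes you already have a document's highlighting dictionary, mapping field name to snippets, presumably what results.Highlighting[doc.Id.ToString()] gives you. HighlightCompat and FirstSnippets are hypothetical names and are not part of SolrNet.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper, not part of SolrNet: converts the new per-document
// highlighting shape (field name -> snippets) back to the old shape
// (field name -> single snippet) by keeping only the first snippet.
public static class HighlightCompat
{
    public static IDictionary<string, string> FirstSnippets(
        IDictionary<string, ICollection<string>> fieldSnippets)
    {
        var result = new Dictionary<string, string>();
        foreach (var pair in fieldSnippets)
        {
            // FirstOrDefault returns null for an empty collection;
            // such fields are left out of the result.
            string first = pair.Value.FirstOrDefault();
            if (first != null)
                result[pair.Key] = first;
        }
        return result;
    }
}
```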

Chainable methods on ISolrOperations

What: Methods on ISolrOperations that used to return ISolrOperations (i.e. chainable methods) are no longer chainable.

Who this affects: Everyone chaining methods or implementing a decorator for ISolrOperations.

Change required in the application: put each method call on its own line. For example, instead of:

solr.Add(new MyDocument {Text = "something"}).Commit();

do:

solr.Add(new MyDocument {Text = "something"});
solr.Commit();

Why: These methods now need to return the Solr response timings, so they can no longer return ISolrOperations.

Removed NoUniqueKeyException

Who this affects: Everyone catching this exception (very rare) or implementing a custom IReadOnlyMappingManager (also rare).

Change required in the application: If you're catching this exception, check for a null result. If you're implementing a custom IReadOnlyMappingManager, return null instead of throwing.

Why: This was needed for the mapping validator (see below).

Removed obsolete exceptions

What: Removed the obsolete exceptions BadMappingException, CollectionTypeNotSupportedException and FieldNotFoundException.

Who this affects: Everyone catching these exceptions (very rare).

Change required in the application: catch SolrNetException instead.

Why: these exceptions were often misleading.

Removed ISolrDocument interface

Who this affects: Everyone implementing this interface in their document types.

Change required in the application: just remove the interface implementation.

Why: It was no longer needed and had been marked obsolete since 0.2.0.

Renamed WaitOptions to CommitOptions

Who this affects: Everyone using the Commit() or Optimize() parameters.

Change required in the application: rename "WaitOptions" to "CommitOptions".

Why: Recent Solr features mean these options now cover much more than waiting, so the name WaitOptions no longer fit.

New features

Here's a quick overview of the main new features:

LocalParams

This feature lets you attach metadata to part of a query. Solr then interprets this metadata in various ways, for example for multi-select faceting.
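In Solr's local params syntax, a query is prefixed with {!key=value ...}. For multi-select faceting, a filter query is tagged, as in {!tag=dt}doctype:pdf, and a facet field excludes that tag, as in {!ex=dt}doctype. The helper below is a hypothetical illustration of that string format only. It is not SolrNet's LocalParams API. Its quoting rule is a simplifying assumption: values containing spaces or quotes get single quotes with backslash escapes.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Text;

// Hypothetical helper, not SolrNet's LocalParams class: renders Solr's
// local params prefix {!k1=v1 k2=v2} in front of a query string.
public static class LocalParamsSketch
{
    public static string Prefix(IEnumerable<KeyValuePair<string, string>> parameters, string query)
    {
        var sb = new StringBuilder("{!");
        var first = true;
        foreach (var p in parameters)
        {
            if (!first)
                sb.Append(' ');   // parameters are separated by spaces
            first = false;
            sb.Append(p.Key).Append('=').Append(Quote(p.Value));
        }
        return sb.Append('}').Append(query).ToString();
    }

    // Simplifying assumption: plain values are emitted as-is. Values that are
    // empty or contain whitespace, quotes, braces or backslashes are wrapped in
    // single quotes, with backslash escapes for embedded ' and \.
    private static string Quote(string value)
    {
        bool plain = value.Length > 0 &&
            value.All(c => !char.IsWhiteSpace(c) && c != '\'' && c != '"' && c != '{' && c != '}' && c != '\\');
        return plain ? value : "'" + value.Replace("\\", "\\\\").Replace("'", "\\'") + "'";
    }
}
```

In a multi-select facet request, the tagged string would go in an fq parameter and the excluding one in facet.field.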

Semi loose mapping

Map some fields explicitly and collect all the others in a dictionary:

public class ProductLoose {
    [SolrUniqueKey("id")]
    public string Id { get; set; }
    [SolrField("name")]
    public string Name { get; set; }
    [SolrField("*")]
    public IDictionary<string, object> OtherFields { get; set; }
}

Here, OtherFields contains fields other than Id and Name. The key of this dictionary is the Solr field name; the value corresponds to the field value.
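The following hand-written sketch shows which values end up where. It splits a flat Solr document into the two explicit properties and the catch-all dictionary. ProductLooseSketch and SemiLooseMappingSketch are hypothetical names. In practice SolrNet does this mapping for you based on the attributes, and its internals may differ.

```csharp
using System.Collections.Generic;

// Hypothetical stand-in for ProductLoose, without the SolrNet attributes.
public class ProductLooseSketch
{
    public string Id { get; set; }
    public string Name { get; set; }
    public IDictionary<string, object> OtherFields { get; set; }
}

// Illustration only, not SolrNet's implementation: "id" and "name" go to
// their own properties, every other field goes to OtherFields.
public static class SemiLooseMappingSketch
{
    public static ProductLooseSketch Map(IDictionary<string, object> solrDoc)
    {
        var product = new ProductLooseSketch { OtherFields = new Dictionary<string, object>() };
        foreach (var field in solrDoc)
        {
            switch (field.Key)
            {
                case "id": product.Id = (string)field.Value; break;
                case "name": product.Name = (string)field.Value; break;
                // No dedicated property: keep it under its Solr field name.
                default: product.OtherFields[field.Key] = field.Value; break;
            }
        }
        return product;
    }
}
```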

Loose mapping

Instead of writing a document class and mapping it using attributes, etc., you can just use a Dictionary<string, object>, where the key is the Solr field name:

Startup.Init<Dictionary<string, object>>("http://localhost:8983/solr");
var solr = ServiceLocator.Current.GetInstance<ISolrOperations<Dictionary<string, object>>>();
solr.Add(new Dictionary<string, object> {
    {"id", "id1234"},
    {"manu", "Asus"},
    {"popularity", 6},
    {"features", new[] {"Onboard video", "Onboard audio", "8 USB ports"}}
});

Mapping validation

This feature detects mapping problems, i.e. type mismatches between the .NET property and the Solr field. It returns a list of warnings and errors:

ISolrOperations<MyDocument> solr = ...
solr.EnumerateValidationResults().ToList().ForEach(e => Console.WriteLine(e.Message));

HTTP-level cache

As of version 1.3, Solr honors the standard HTTP cache headers (If-None-Match, If-Modified-Since, etc.) and outputs correct Last-Modified and ETag headers.

Normally, the way to take advantage of this is to set up an HTTP caching proxy (like Squid) between Solr and your application.

With this release, SolrNet includes an optional cache, so you don't need to set up that extra proxy. Unfortunately, Solr generates incorrect headers when running distributed searches, so I won't make this cache the default until that's fixed. However, if you're not running distributed searches (i.e. sharding), it's perfectly safe to use this cache. It can give a major performance boost at the cost of some memory, depending of course on how often you repeat queries.

To use the cache, you only need to register a component implementing the ISolrCache interface at startup. For example, if you're using Windsor:

container.Register(Component.For<ISolrCache>().ImplementedBy<HttpRuntimeCache>());

The HttpRuntimeCache implementation uses the ASP.NET Cache with a default sliding expiration of 10 minutes.
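Such a cache relies on conditional requests. Each response's ETag is stored with its body. The next identical request sends that ETag in If-None-Match, and when Solr answers 304 Not Modified the stored body is reused. Below is a minimal in-memory sketch of that round trip. ETagCacheSketch is a hypothetical class, not SolrNet's ISolrCache or HttpRuntimeCache. Unlike HttpRuntimeCache, it has no expiration and no memory limit.

```csharp
using System.Collections.Generic;

// Hypothetical sketch, not SolrNet's ISolrCache: an in-memory ETag cache
// keyed by request URL. No expiration or size limit.
public class ETagCacheSketch
{
    private readonly Dictionary<string, (string ETag, string Body)> entries =
        new Dictionary<string, (string ETag, string Body)>();

    // Value to send in the If-None-Match header, or null if nothing is cached.
    public string IfNoneMatchFor(string url) =>
        entries.TryGetValue(url, out var entry) ? entry.ETag : null;

    // Returns the response body to use for this request.
    public string HandleResponse(string url, int statusCode, string etag, string body)
    {
        // 304 Not Modified: Solr sent no body, so reuse the cached one.
        if (statusCode == 304 && entries.TryGetValue(url, out var cached))
            return cached.Body;

        if (statusCode == 200)
        {
            if (etag != null)
                entries[url] = (etag, body);   // remember it for the next request
            else
                entries.Remove(url);           // nothing to revalidate against
        }
        return body;
    }
}
```

A real client would add the If-None-Match value to the outgoing HTTP request before passing Solr's response to HandleResponse.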

StructureMap integration

In addition to the built-in container, the Windsor facility and the Ninject module, you can now manage SolrNet with StructureMap. See this article by Mark Unsworth for details.

Index-time field boosting

You can now define a boost factor for each field, to be used at index time, e.g.:

public class TestDocWithFieldBoost
{
    [SolrField("text", Boost = 20)]
    public string Body { get; set; }
}
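In Solr's XML update format, an index-time field boost is a boost attribute on the field element. The sketch below builds such a message by hand with LINQ to XML to show the result. BoostedAddSketch is a hypothetical helper. It assumes SolrNet serializes Boost = 20 this way; in practice SolrNet generates the XML for you.

```csharp
using System.Xml.Linq;

// Hypothetical helper: builds a Solr XML <add> message by hand to show where
// an index-time field boost goes. Assumes the XML update format with a
// boost attribute on <field>.
public static class BoostedAddSketch
{
    public static string Build(string id, string body, float textBoost)
    {
        var add = new XElement("add",
            new XElement("doc",
                new XElement("field", new XAttribute("name", "id"), id),
                // Roughly what [SolrField("text", Boost = ...)] on Body is
                // expected to produce (assumption).
                new XElement("field",
                    new XAttribute("name", "text"),
                    new XAttribute("boost", textBoost),
                    body)));
        return add.ToString(SaveOptions.DisableFormatting);
    }
}
```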

Improved multi-core / multi-instance configuration for the Windsor facility

The Windsor facility now has an AddCore() method to help wire the internal components of SolrNet to manage multiple cores/instances of Solr. Here's an example:

var solrFacility = new SolrNetFacility("http://localhost:8983/solr/defaultCore");
solrFacility.AddCore("core0-id", typeof(Document), "http://localhost:8983/solr/core0");
solrFacility.AddCore("core1-id", typeof(Document), "http://localhost:8983/solr/core1");
solrFacility.AddCore("core2-id", typeof(Core1Entity), "http://localhost:8983/solr/core1");
var container = new WindsorContainer();
container.AddFacility("solr", solrFacility);
ISolrOperations<Document> solr0 = container.Resolve<ISolrOperations<Document>>("core0-id");

Of course, you usually wouldn't call Resolve() like that (this is just to demonstrate the feature). Instead, you'd use service overrides to inject the proper ISolrOperations into your services.

There are some other minor new features, see the changelog for details.

Contributors

I want to thank the following people who contributed to this release (I wish they'd use their real names so I can give them proper credit!):

- Olle de Zwart: implemented the schema validator and deleting by id and by query in the same request, and helped with integration tests.

- mRg: implemented index-time field boosting and the new commit/optimize parameters.

- ironjelly2: updated the code to work with the new field collapsing patch.

- Mark Unsworth: wrote the StructureMap adapter.

- mr.snuffle: fixed a performance issue in SolrMultipleCriteriaQuery.

I'd also like to thank everyone who submitted a bug report.

Feel free to join the project's mailing list if you have any questions about SolrNet.